Jacek Galowicz · 9 min read

Meltdown

Meltdown is an attack on the general memory data security of computers with the Intel x86 architecture. Two members of the founder team of Cyberus Technology GmbH were among the first experts to discover this vulnerability. This article describes how Meltdown works and examines the mitigations that have been patched into the most widespread operating systems while the information embargo was still intact.

Rumors

The rumor factory has been working overtime for about a week now, and many IT blogs and online newspapers have written about it:

- Cyber.wtf (Blog): Negative Result: Reading Kernel Memory From User Mode

- python sweetness (Blog): The mysterious case of the Linux Page Table Isolation patches

- The Register: Kernel-memory-leaking Intel processor design flaw forces Linux, Windows redesign

- Intel: Intel Responds to Security Research Findings

The Exploit

In short: it is possible to exploit the speculative execution of x86 processors in order to read arbitrary kernel memory.

Thomas Prescher and Werner Haas, two of the founders of Cyberus Technology GmbH, were among the first experts to discover this bug. We disclosed this information responsibly to the Intel Corporation and adhered to the information embargo, which Intel officially lifted on January 3rd, 2018.

A complete and detailed explanation can be found in the official Meltdown paper.

Implications

A short summary of what this security bug means:

- Code utilizing this exploit works on Windows, Linux, etc., because this is a hardware issue, not a software issue.

- It is possible to dump arbitrary kernel memory with unprivileged user space applications.

- Reading kernel memory implies reading the memory of the whole system on most 64-bit operating systems, i.e. also memory from the address spaces of other processes.

- Fully virtualized machines are not affected (guest user space can still read from guest kernel space, but not from host kernel space).

- Paravirtualized machines are affected (e.g. Xen PV).

- Container solutions like Docker, LXC, OpenVZ are all equally affected.

- The Linux KPTI / KAISER patches mitigate Meltdown effects on Linux.

- Most modern processors are affected, in particular Intel microarchitectures released since 2010.

- We have no evidence that this attack is successfully deployable on ARM or AMD, although the underlying principle applies to all contemporary superscalar CPUs.

- Meltdown is distinct from the Spectre attacks, which became public at the same time.

Press Contact

If you have any questions about Meltdown and its impact or the involvement of Cyberus Technology GmbH, please contact:

Werner Haas, CTO | Tel +49 152 3429 2889 | Mail werner.haas@cyberus-technology.de

Example Attack

As part of our research, we implemented a proof-of-concept that reliably dumps kernel memory from arbitrary addresses:

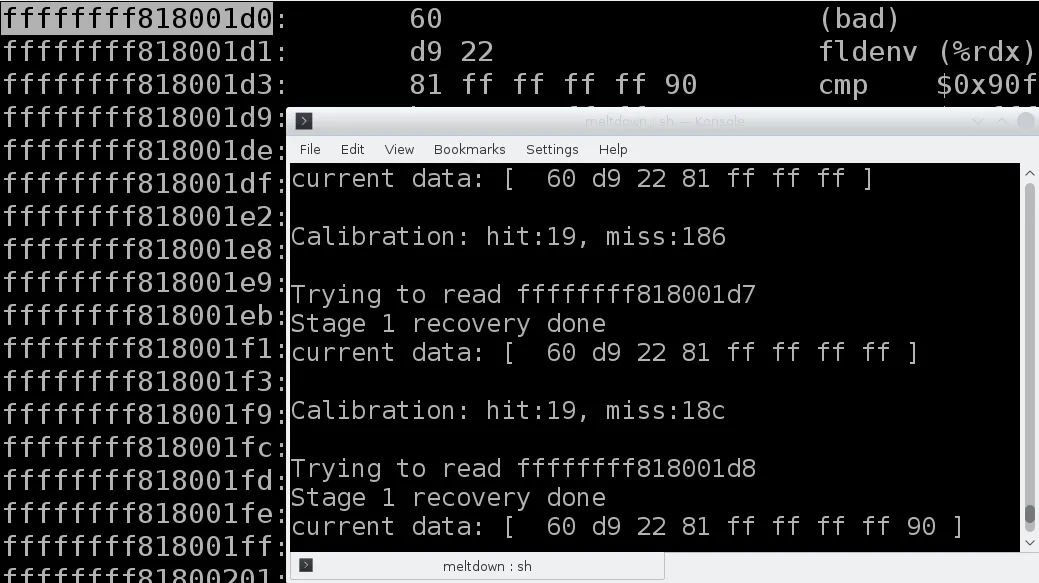

In this example run, we dumped part of the system call table (which is normally not accessible from user space) on one of our systems running one of the latest Linux kernels before the KAISER patch set was deployed (we tried the Sandy Bridge, Broadwell, and Haswell microarchitectures). The application runs as an unprivileged user program.

The frontmost terminal window shows our proof-of-concept application dumping the first bytes of the system call table (0x60 0xd9 0x22 0x81 0xff 0xff 0xff 0xff 0x90). For comparison with the real memory content, the terminal window in the background shows a legitimate kernel dump of the same memory, with identical values. The matching outputs show that the exploit technique works.
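The dumped bytes are easier to read once they are interpreted. x86 stores multi-byte values little-endian, and the system call table holds 64-bit pointers to the kernel's handler functions. A minimal C sketch shows that the first eight dumped bytes form a single address in the upper half of the address space, which Linux reserves for the kernel. The helper names `load_le64` and `is_upper_half` are hypothetical.

```c
#include <stddef.h>
#include <stdint.h>

/* Assemble a 64-bit value from 8 bytes stored little-endian,
   the byte order x86 uses in memory. */
static uint64_t load_le64(const uint8_t b[8])
{
    uint64_t v = 0;
    for (size_t i = 0; i < 8; i++)
        v |= (uint64_t)b[i] << (8 * i);
    return v;
}

/* With 48-bit virtual addresses, canonical addresses from
   0xffff800000000000 upward form the upper half, which Linux
   reserves for the kernel. */
static int is_upper_half(uint64_t va)
{
    return va >= 0xffff800000000000ull;
}
```

Applied to the bytes 0x60 0xd9 0x22 0x81 0xff 0xff 0xff 0xff, `load_le64` yields 0xffffffff8122d960, a kernel-half address. The trailing 0x90 belongs to the next table entry.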

Technical Background

In a nutshell: Meltdown effectively overcomes the kernel space/user space memory isolation barrier of the x86 architecture. The access happens indirectly, by exploiting side-channel information that the cache inadvertently leaks after the speculative, out-of-order execution of a prepared instruction stream.

The root cause of the problem is the combination of the following subsystems of a processor’s microarchitecture. Note that no individual component is to blame for the vulnerability; the problem lies in how they work together:

Virtual Memory

Virtual memory, the mapping from virtual to physical addresses, enables the operating system to isolate processes spatially from each other. On top of that, the operating system can define which ranges of an address space user applications may access, in order to protect kernel memory from unauthorized access.

This is the protection that Meltdown bypasses.
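The C sketch below makes this mapping concrete. It assumes x86-64 with 4-level paging and 4 KiB pages. A virtual address is split into four 9-bit table indices and a 12-bit page offset. The User/Supervisor bit (bit 2) of a page-table entry decides whether user mode may access the page. The struct and function names are hypothetical. The sketch also simplifies one thing: a real page walk checks permissions at every level, not in a single entry.

```c
#include <stdbool.h>
#include <stdint.h>

/* Indices into the four x86-64 page-table levels (PML4, PDPT, PD, PT)
   plus the byte offset within a 4 KiB page. */
struct va_parts {
    unsigned pml4, pdpt, pd, pt;
    unsigned offset;
};

static struct va_parts split_va(uint64_t va)
{
    struct va_parts p;
    p.offset = (unsigned)(va & 0xfff);          /* bits 0..11  */
    p.pt     = (unsigned)((va >> 12) & 0x1ff);  /* bits 12..20 */
    p.pd     = (unsigned)((va >> 21) & 0x1ff);  /* bits 21..29 */
    p.pdpt   = (unsigned)((va >> 30) & 0x1ff);  /* bits 30..38 */
    p.pml4   = (unsigned)((va >> 39) & 0x1ff);  /* bits 39..47 */
    return p;
}

#define PTE_PRESENT (1ull << 0)  /* entry holds a valid translation */
#define PTE_USER    (1ull << 2)  /* clear = supervisor (kernel) only */

/* Simplified check on a single entry: user mode may access the page
   only if it is present and the User/Supervisor bit is set. */
static bool user_may_access(uint64_t pte)
{
    return (pte & PTE_PRESENT) && (pte & PTE_USER);
}
```

For example, the kernel address 0xffffffff8122d960 splits into the indices 511, 510, 9 and 45 with page offset 0x960.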

Memory Cache

Since memory access is slow compared to the speed at which the CPU operates, frequently accessed memory is transparently cached in order to accelerate program execution.

It is possible to measure, by timing accesses, which memory locations are warm (cached) and which are cold (not cached). This is a crucial part of the attack.
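Real cache-timing measurements on x86 combine a cycle counter with an instruction that evicts a cache line, such as `rdtscp` and `clflush`. Such timings are machine-dependent, so the sketch below uses a toy direct-mapped cache model instead. Its latencies (`HIT_CYCLES`, `MISS_CYCLES`) are invented for illustration, and all names are hypothetical. It demonstrates only the observable effect: a flushed line reads slowly, and a recently touched line reads fast.

```c
#include <stdbool.h>
#include <stdint.h>
#include <string.h>

/* Toy direct-mapped cache; the latencies are illustrative assumptions,
   not measurements of any real CPU. */
#define LINE_SIZE   64u
#define NUM_LINES   1024u
#define HIT_CYCLES  40u
#define MISS_CYCLES 200u

struct toy_cache {
    bool     valid[NUM_LINES];
    uint64_t tag[NUM_LINES];   /* full line number, for simplicity */
};

static void cache_init(struct toy_cache *c) { memset(c, 0, sizeof *c); }

/* Access an address: return the simulated latency and fill the line. */
static unsigned cache_access(struct toy_cache *c, uint64_t addr)
{
    uint64_t line = addr / LINE_SIZE;
    unsigned set = (unsigned)(line % NUM_LINES);
    if (c->valid[set] && c->tag[set] == line)
        return HIT_CYCLES;                 /* warm */
    c->valid[set] = true;                  /* miss: fill the line */
    c->tag[set] = line;
    return MISS_CYCLES;                    /* cold */
}

/* Counterpart of x86 clflush in this model: evict the line holding addr. */
static void cache_flush(struct toy_cache *c, uint64_t addr)
{
    uint64_t line = addr / LINE_SIZE;
    unsigned set = (unsigned)(line % NUM_LINES);
    if (c->valid[set] && c->tag[set] == line)
        c->valid[set] = false;
}
```

Accessing an address twice shows a miss and then a hit. After `cache_flush`, the next access is a miss again.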

Speculative Execution

From a macroscopic view, superscalar processors still execute machine code instructions sequentially. From a microscopic view, however, contemporary designs relax the order in which the instruction sequence is processed to accelerate program execution, for example by operating concurrently on instructions that have no data dependencies on each other.

Within a pipelined core, the processor fetches instructions from memory, decodes what they are supposed to do, fetches operand data from registers and/or memory, executes them on the operand data, and then stores the results back in registers and/or memory. While doing so, it breaks down each instruction into atomic parts, so-called micro-operations.

A superscalar CPU has multiple processing units that can perform arithmetic and logical operations, as well as load/store units. With the instruction stream split into those atomic parts, a dynamic scheduler can dispatch instructions whose operands do not depend on each other’s completion to as many processing units as possible. This accelerates execution within each core.
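The dependency check such a scheduler performs can be sketched in C. The micro-op encoding (`struct uop`) and the function names are hypothetical simplifications. Only read-after-write dependencies are considered, because real cores remove write-after-read and write-after-write conflicts through register renaming.

```c
#include <stdbool.h>
#include <stddef.h>

/* Hypothetical micro-op: one destination and up to two source
   registers (-1 marks an unused operand). */
struct uop { int dst, src1, src2; };

/* True if uop j reads a register that the earlier uop i writes
   (a read-after-write dependency). */
static bool depends_on(const struct uop *j, const struct uop *i)
{
    return i->dst >= 0 && (j->src1 == i->dst || j->src2 == i->dst);
}

/* Mark which uops in the window depend on no earlier uop, i.e. could
   be dispatched to parallel units in the same cycle. Returns the count. */
static size_t ready_uops(const struct uop *w, size_t n, bool *ready)
{
    size_t count = 0;
    for (size_t j = 0; j < n; j++) {
        ready[j] = true;
        for (size_t i = 0; i < j; i++) {
            if (depends_on(&w[j], &w[i])) {
                ready[j] = false;
                break;
            }
        }
        count += ready[j];
    }
    return count;
}
```

Consider the window `r0 = r1 + r2; r3 = r4 + r5; r6 = r0 + r3`. The first two micro-ops are independent and could issue together, while the third must wait for both.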

To keep the processing units busy and maximize this effect, the pipeline needs long, uninterrupted instruction streams. Programs, however, contain many conditional and unconditional jumps, which chop the instruction stream into parts that are often too short to keep the pipeline filled.

By guessing the control flow path in the so-called branch prediction unit (BPU), the processor can feed the pipeline with a continuous stream of instructions. When the prediction for a conditional jump is correct, no cycles are wasted waiting for the condition to be resolved, which accelerates program execution. In case of a mispredicted path, the pipeline is flushed and all intermediate results are discarded before they become visible in registers or memory. The front-end is then resteered to the correct instruction address, and the CPU resumes correct program execution.
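A classic textbook building block of branch predictors is the 2-bit saturating counter, sketched below in C. It only illustrates the idea of guessing a branch direction from its history. The predictors in the CPUs discussed here are far more elaborate and largely undocumented. The function names are hypothetical.

```c
#include <stdbool.h>
#include <stdint.h>

/* 2-bit saturating counter: states 0-1 predict "not taken",
   states 2-3 predict "taken". A single surprise does not flip
   a strongly biased prediction. */
typedef uint8_t counter2;

static bool predict_taken(counter2 c) { return c >= 2; }

static counter2 update(counter2 c, bool taken)
{
    if (taken)
        return c < 3 ? (counter2)(c + 1) : c;
    return c > 0 ? (counter2)(c - 1) : c;
}

/* Count how often one counter mispredicts a sequence of outcomes. */
static unsigned count_mispredictions(const bool *outcomes, unsigned n,
                                     counter2 c)
{
    unsigned misses = 0;
    for (unsigned i = 0; i < n; i++) {
        if (predict_taken(c) != outcomes[i])
            misses++;
        c = update(c, outcomes[i]);
    }
    return misses;
}
```

Consider a loop branch that is taken nine times and then falls through, with the pattern repeated twice. A counter starting in the strongly-not-taken state mispredicts four times out of twenty: twice while warming up and once at each loop exit.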

This kind of speculative execution does not only occur across branches. When a program accesses a specific memory cell, the processor needs to decide whether it is allowed to do so by consulting the virtual memory subsystem. If the memory cell has been cached previously, the data is already available and is returned while the processor is still checking whether the access is legitimate. With speculative execution, the processor can therefore trigger actions that depend on the result of a memory access before the corresponding instruction has completed.

If the memory access turns out to be illegitimate, the results of such an instruction stream must be discarded as well. A user application cannot access the final result of any computation that relies on such an illegitimate memory access. The interesting crux, however, is that although retirement is performed correctly, all speculatively executed and then discarded instructions have still left a measurable effect on the cache subsystem…
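A toy model captures this asymmetry between discarded results and a persistent cache footprint. The sketch below is a simulation, not code that runs against real hardware. `struct toy_cpu`, `run_sequence` and the `value` parameter are hypothetical. `value` stands in for the byte that the load forwards before its permission check completes.

```c
#include <stdbool.h>
#include <stdint.h>
#include <string.h>

/* Toy model, not real hardware: a fault rolls back architectural
   state, but cache fills made by transient instructions survive. */
struct toy_cpu {
    uint64_t rax;          /* one architectural register */
    bool     warm[256];    /* which probe-array pages are cached */
    bool     faulted;      /* set when the illegal load retires */
};

static void cpu_init(struct toy_cpu *cpu) { memset(cpu, 0, sizeof *cpu); }

static void run_sequence(struct toy_cpu *cpu, uint8_t value, bool permitted)
{
    /* Transient phase: the byte is used before the permission check
       has completed. */
    uint64_t offset = (uint64_t)value << 12;  /* shl rax, 0xc */
    cpu->warm[offset >> 12] = true;           /* probe access fills the cache */

    if (!permitted) {
        /* Retirement raises the fault: the register result is dropped... */
        cpu->faulted = true;
        return;                               /* ...the cache state is not */
    }
    cpu->rax = offset;                        /* legal access: commit result */
}
```

After a forbidden run with value 0x42, the model reports a fault and an unchanged `rax`, yet probe page 0x42 is marked warm.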

Tying it All Together

By combining the characteristics of all these subsystems on an x86 CPU, we can execute the following machine code instructions from within an unprivileged user application (the code is a reduced version of the original proof-of-concept, simplified for the explanation):

```nasm
; rcx = a protected kernel memory address
; rbx = address of a large array in user space
movzx rax, byte [rcx]       ; read one byte from the forbidden kernel address
                            ; (zero-extended so stale upper bits of rax cannot skew the offset)
shl rax, 0xc                ; multiply the result of the read operation by 4096
mov rbx, qword [rbx + rax]  ; touch the user space array at the offset just calculated
```

The first instruction tries to load one byte from an address within the protected kernel space into a processor register. The effect of this instruction is generally a page fault, because normal user space applications are not allowed to access kernel memory addresses. This page fault will eventually hit the application (although it is possible to avoid page faults using transactional memory), but there is a very high chance that the superscalar processor will speculatively execute the following two instructions before it does.

The second instruction shifts the (cached) value that came from kernel space 12 bits to the left, which is the same as multiplying it by 4096. The choice of this number, which equals the x86 page size, is an implementation detail that the official Meltdown paper explains more thoroughly.

The third instruction reads from a spot in the user space array that is indexed by the value the program just (illegally) read from kernel space and multiplied by 4096.

Of course, none of this happens architecturally: the fault prevents these instructions from retiring, so the actual result never becomes visible in register rbx, where the final read result would go.

Assume that the user space array is 4096 * 256 bytes (1 MiB) large. The byte that the program reads from the kernel can have values ranging from 0 to 255. Multiplying such a value by 4096 lets the program reach the array at 256 different spots that are each 4096 bytes apart.
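A few lines of C can check this arithmetic. The constant and function names are hypothetical. The sketch only confirms two things: each of the 256 possible byte values selects its own 4096-byte slot, and the slots exactly fill a 1 MiB array.

```c
#include <stddef.h>
#include <stdint.h>

#define PROBE_STRIDE 4096u                          /* one page per value */
#define PROBE_VALUES 256u                           /* every possible byte */
#define PROBE_SIZE   (PROBE_STRIDE * PROBE_VALUES)  /* 1 MiB in total */

/* Offset touched for a given byte value: value << 12 == value * 4096. */
static size_t probe_offset(uint8_t value)
{
    return (size_t)value << 12;
}
```

The largest value, 255, lands on the last slot, which ends exactly at the end of the array.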

None of these spots contains anything useful before or after this sequence of machine code instructions, but it is possible to make sure that the whole user space array is completely uncached (cold) before executing them. After the attempt, the program has to recover from the page fault that the processor raises. But then, one of the spots in the user space array remains cached!

Finding the offset of the cached (warm) spot in the user space array reveals the value that was read from kernel memory. This can be done by measuring the access time of each of the 256 spots that the speculative execution could have touched. That is basically it.
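The final decoding step might look like the C sketch below, assuming the 256 access latencies have already been measured. `recover_byte` and its threshold parameter are hypothetical. A usable threshold depends on the machine and has to be calibrated, for example by timing known cached and uncached accesses.

```c
#include <stdint.h>

/* Given one measured latency per probe slot, return the byte value whose
   slot is fastest, provided it is below the hit threshold; otherwise
   return -1 because no slot looks cached. */
static int recover_byte(const uint64_t latency[256], uint64_t hit_threshold)
{
    int best = -1;
    uint64_t best_latency = UINT64_MAX;
    for (int v = 0; v < 256; v++) {
        if (latency[v] < best_latency) {
            best_latency = latency[v];
            best = v;
        }
    }
    return best_latency < hit_threshold ? best : -1;
}
```

Practical implementations typically repeat the measurement several times and probe the slots in a scrambled order, so that the hardware prefetcher does not warm neighboring slots.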

Mitigation Techniques

It is possible to apply a software patch to the operating system kernel in order to mitigate the effects of the Meltdown attack:

KPTI / KAISER Patches

The KPTI / KAISER patches, which have already been applied to the latest Linux kernel version, help against Meltdown attacks. This series of patches, based on the KAISER work by Gruss et al., splits the user space and kernel space page tables. While code executes in user space, all kernel memory pages are unmapped except for a minimal set needed to enter the kernel.

This patch set brings a certain amount of performance degradation, but it makes it impossible for Meltdown to access crucial kernel data structures, because they are not mapped while user code runs.
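A few lines of C can model the idea behind page table isolation. This is a toy illustration with hypothetical names and page numbers, not the kernel's implementation. The table active in user mode keeps user pages and a small kernel entry region (the code needed to handle system calls and interrupts) but drops all other kernel pages. A speculative load from such a kernel address therefore has no translation to use.

```c
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

enum region { REGION_USER, REGION_KERNEL, REGION_KERNEL_ENTRY };

struct mapping {
    uint64_t    page;   /* virtual page number (illustrative) */
    enum region kind;
};

/* Build the table used while user code runs: keep user pages and the
   kernel entry region, drop every other kernel page. Returns the number
   of mappings written to out, which needs room for n entries. */
static size_t build_user_view(const struct mapping *all, size_t n,
                              struct mapping *out)
{
    size_t m = 0;
    for (size_t i = 0; i < n; i++)
        if (all[i].kind != REGION_KERNEL)
            out[m++] = all[i];
    return m;
}

/* Does the given table contain a translation for this page? */
static bool is_mapped(const struct mapping *table, size_t n, uint64_t page)
{
    for (size_t i = 0; i < n; i++)
        if (table[i].page == page)
            return true;
    return false;
}
```

Without isolation, the single full table keeps every kernel page mapped and relies solely on the User/Supervisor bit, which is exactly the protection Meltdown bypasses.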

A similar strategy helps to keep Windows and OS X kernels safe.