Philipp Schuster, Markus Partheymüller · 9 min read

Testing Virtualization Stacks by Utilizing Mini Operating System Kernels

Testing and debugging erroneous behaviour of a guest running under a virtualization stack is hard. By leveraging multiple mini operating system kernels, we can investigate issues related to complicated topics, such as interrupts that are never delivered, with a precise focus on where to look. For that purpose, we created our internal Cyberus Guest Tests, which we present in this blog post.

Targeted Low-Level Testing of Virtualization Stacks Matters

Real hardware is complex and comes with many sharp edges and caveats, and so does proper virtualization. Good test coverage for low-level facilities, such as the virtualized interrupt delivery path, is crucial for a sustainable and correct code base, a good developer experience, and the long-term success of a business or project.

Writing unit tests, which normally execute on the host (under Linux or Windows), is not always feasible when testing certain aspects of a virtualization stack. Regular unit tests can only model how hardware behaves when different components work together, especially in the domain of asynchronous events such as timer interrupts, and a large part of the real environment would need to be recreated. Mocking hardware functionality is error-prone:

- The mock implementation can contain logic errors or false assumptions.

- The actual hardware may not match the test’s assumptions.
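To make the first pitfall concrete, here is a deliberately naive host-side mock of the LAPIC timer, written for illustration only; the type names are hypothetical and not taken from our code. The naive mock forgets that bit 16 of the LVT timer entry masks the interrupt, so a unit test built on it would count interrupts that real hardware never delivers:

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical host-side mocks of the LAPIC timer, for illustration only.
// On real hardware, bit 16 of the LVT timer entry masks the interrupt.
constexpr std::uint32_t LVT_MASK_BIT = 1u << 16;

struct NaiveTimerMock {
    std::uint32_t lvt_timer = 0;
    unsigned delivered = 0;

    // Logic error: "delivers" the interrupt even if the LVT entry is masked.
    void expire() { ++delivered; }
};

struct FaithfulTimerMock {
    std::uint32_t lvt_timer = 0;
    unsigned delivered = 0;

    // Honors the mask bit, as the hardware does.
    void expire() {
        if ((lvt_timer & LVT_MASK_BIT) == 0) {
            ++delivered;
        }
    }
};
```

A test written against `NaiveTimerMock` passes with a masked timer, although real hardware would never raise the interrupt. Running the same scenario as a guest on real hardware exposes such a wrong assumption.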

Our Solution: Cyberus Guest Test

To tackle this problem, we at Cyberus created our Cyberus Guest Tests. This soon-to-be open-sourced project has existed internally since the beginning of our engineering efforts. Essentially, they are mini operating system kernels that each test an isolated aspect of a typical operating system. We call them Guest Tests because they are meant to be executed as a guest, i.e., in a VM.

They act as very lightweight integration tests that we can boot on real hardware as well as in a Virtual Machine Monitor (VMM). Some people might also call them unit tests for virtualization stacks, although they do not have much in common with traditional unit test frameworks, as mentioned above.

You can find the project on GitHub.

Solved Problems

Our Guest Tests bring two major benefits:

- Hard-to-debug errors, such as interrupt-related issues, are much easier to spot than when a Linux or Windows guest just freezes.

- They are excellent learning resources about how operating systems are built and thus help us onboard new colleagues.

Over time, we at Cyberus have used various stock virtualization stacks, specialized forks of these, and proprietary projects to develop our products. Each project has its own CI pipeline, in which every single commit is tested thoroughly against a whole suite of tests.

Before these CI pipelines execute fully fledged integration tests with Windows and Linux as VM guests, we run our Guest Tests. This way, we can easily spot if something goes wrong before we have to debug a “Windows guest freeze” bug. Occasionally, we still face situations where a Windows or Linux guest misbehaves, for example by freezing. Once we have tracked down the issue, we add minimal test cases to our Guest Tests that cover the root cause of that failure, so it is caught early in the future.

To name a specific example: We have a Guest Test for the LAPIC timer that tests its different modes in various test cases. They range from basic test cases that expect a single interrupt in ONESHOT mode up to PERIODIC mode, where multiple interrupts are expected. The periodic test case looks as follows:

```cpp
TEST_CASE(timer_mode_periodic_should_cycle)
{
    // Configure an interrupt handler.
    irq_handler::guard _(lapic_irq_handler);

    // Configure the timer for periodic mode and unmask the LVT entry.
    write_lvt_entry(lvt_entry::TIMER,
                    lvt_entry_t::timer(
                        MAX_VECTOR,
                        lvt_mask::UNMASKED,
                        lvt_timer_mode::PERIODIC));
    write_divide_conf(1); // Further LAPIC setup.

    // Start timer.
    write_to_register(LAPIC_INIT_COUNT, TIMER_INIT_COUNT);

    // Wait for interrupts. Control flow continues once EXPECTED_IRQS interrupts are received.
    wait_for_interrupts(counting_irq_handler, EXPECTED_IRQS);

    BARETEST_ASSERT((irq_count == EXPECTED_IRQS));

    drain_periodic_timer_irqs();
}
```

How these test cases report their results is discussed below in the Console Output & Output Scheme section.
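For readers new to the LAPIC, the following sketch shows how arguments like those passed to `lvt_entry_t::timer` and `write_divide_conf` could map onto register bits. It follows the LVT timer register and Divide Configuration Register (DCR) layouts from the Intel SDM: vector in bits 0 to 7, mask in bit 16, timer mode in bits 17 and 18, and the divisor encoded in DCR bits 0, 1, and 3. The function and enum names are hypothetical; libtoyos may implement this differently.

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical encoders for the LAPIC LVT timer register and the
// Divide Configuration Register (DCR), following the Intel SDM layout.
enum class TimerMode : std::uint32_t { ONESHOT = 0b00, PERIODIC = 0b01, TSC_DEADLINE = 0b10 };

constexpr std::uint32_t encode_lvt_timer(std::uint8_t vector, bool masked, TimerMode mode) {
    return static_cast<std::uint32_t>(vector)          // bits 0-7: vector
         | (masked ? (1u << 16) : 0u)                  // bit 16: mask
         | (static_cast<std::uint32_t>(mode) << 17);   // bits 17-18: timer mode
}

// The DCR encodes the divisor in bits 0, 1 and 3 (bit 2 is reserved).
// Divisors 2..128 use the 3-bit codes 000..110, divide-by-1 uses 111.
std::uint32_t encode_divide_conf(unsigned divisor) {
    unsigned code = 0;
    switch (divisor) {
        case 2:   code = 0b000; break;
        case 4:   code = 0b001; break;
        case 8:   code = 0b010; break;
        case 16:  code = 0b011; break;
        case 32:  code = 0b100; break;
        case 64:  code = 0b101; break;
        case 128: code = 0b110; break;
        case 1:   code = 0b111; break;
        default:  assert(false && "unsupported divisor");
    }
    // Move the top bit of the 3-bit code from position 2 to position 3.
    return (code & 0b011) | ((code & 0b100) << 1);
}
```

For example, vector `0xFF` in unmasked periodic mode encodes as `0x200FF`, and a divisor of 1 as DCR value `0xB`.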

Our Success Stories

During the development of the KVM backend for VirtualBox, we relied heavily on those tests, especially on the ones targeting the Local APIC. For instance, a common pitfall for virtualization software is when interrupts become pending, but the guest OS is not in a state where the interrupt can actually be delivered. In such cases, the VMM needs to instruct the hardware to transfer control back to the VMM as soon as all preconditions to deliver an interrupt are met. Errors in the implementation of interrupt handling can lead to dropped interrupts, causing hangs in the guest. With the Guest Tests, we can manufacture a situation where exactly one interrupt is pending, and finely control the conditions under which interrupts can be delivered and when the VMM has to take special action.
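The decision a VMM faces in this situation can be modeled roughly as follows. This is a simplified sketch, not VirtualBox or KVM code: on Intel VMX, an external interrupt can only be injected when RFLAGS.IF is set and the guest is not in an STI or MOV SS interrupt shadow. Otherwise, the VMM sets the "interrupt-window exiting" control so that the CPU exits to the VMM once the guest becomes interruptible. The names are hypothetical, and the model ignores further conditions such as NMIs, TPR/PPR priorities, and events already being injected.

```cpp
#include <cassert>

// Simplified model of the guest state that gates external-interrupt
// delivery on Intel VMX. Ignores NMIs, TPR/PPR priorities, and other
// pending events for brevity.
struct GuestState {
    bool rflags_if;          // RFLAGS.IF: guest has interrupts enabled
    bool blocked_by_sti;     // interrupt shadow right after STI
    bool blocked_by_mov_ss;  // interrupt shadow right after MOV SS / POP SS
};

enum class Action {
    Nothing,                     // no interrupt pending
    InjectNow,                   // inject the interrupt on the next VM entry
    RequestInterruptWindowExit,  // keep it pending; exit once the guest can take it
};

bool can_accept_external_interrupt(const GuestState& g) {
    return g.rflags_if && !g.blocked_by_sti && !g.blocked_by_mov_ss;
}

// Hypothetical decision made before each VM entry.
Action decide_before_vm_entry(const GuestState& g, bool irq_pending) {
    if (!irq_pending) {
        return Action::Nothing;
    }
    if (can_accept_external_interrupt(g)) {
        return Action::InjectNow;
    }
    // Omitting this case is the classic bug: the interrupt stays pending,
    // the VMM may never get a chance to deliver it, and the guest can hang.
    return Action::RequestInterruptWindowExit;
}
```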

Another example of the debugging power of such tests came up just recently, while we were working on the nested virtualization support for our KVM backend. We will dive deeper into this fascinating bug hunting story in a future article, so stay tuned!

Real Hardware is the Reference

All our Guest Tests must pass all test cases on real hardware, because real hardware is always the reference; these runs involve no virtualization at all. Once a (new) Guest Test passes all test cases on multiple real machines, we use it as part of the CI of our virtualization products.

This also means that the CI pipeline of our Guest Tests repository runs all these tests on real hardware, not virtualized. We only run a small “Hello World” Guest Test in various VMMs to ensure that the basic repository infrastructure and the boot process of the Guest Tests work. We do not want a broken pipeline just because some VMM fails one of our tests.

We would like to emphasize that none of QEMU/KVM, VirtualBox/KVM, VirtualBox/vboxdrv, or Cloud Hypervisor/KVM executes all of our Guest Tests’ test cases successfully (yet). As real hardware is our reference, we can safely say that these virtualization stacks contain bugs. To be fair, some of the test cases are quite obscure, yet valid, especially in the field of interrupts.
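Some of these obscure but valid cases concern legacy interrupt routing through the LAPIC’s LINT0 pin. As background for the failing QEMU/KVM case that follows: in the classic virtual wire setup, LINT0 is programmed with the ExtINT delivery mode, and the 8259 PIC supplies the vector. In Fixed mode, the LAPIC instead delivers the vector programmed in the LVT LINT0 entry itself. The sketch below encodes that entry following the Intel SDM layout (vector in bits 0 to 7, delivery mode in bits 8 to 10, mask in bit 16); the names are hypothetical and vector `0x30` is an arbitrary example.

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical encoder for the LAPIC LVT LINT0 register (Intel SDM layout).
enum class DeliveryMode : std::uint32_t {
    Fixed = 0b000,   // LAPIC delivers the vector programmed in bits 0-7
    Nmi = 0b100,
    ExtInt = 0b111,  // vector is supplied by the external 8259 PIC
};

constexpr std::uint32_t LVT_MASKED = 1u << 16;

constexpr std::uint32_t encode_lvt_lint0(std::uint8_t vector, DeliveryMode mode, bool masked) {
    return static_cast<std::uint32_t>(vector)          // bits 0-7: vector
         | (static_cast<std::uint32_t>(mode) << 8)     // bits 8-10: delivery mode
         | (masked ? LVT_MASKED : 0u);                 // bit 16: mask
}
```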

In concrete terms, QEMU/KVM fails when the PIT timer interrupt is passed via LINT0 as a fixed interrupt:

```cpp
TEST_CASE(pit_irq_via_lapic_lint0_fixed)
{
    before_test_case_cleanup();
    prepare_pit_irq_env(PitInterruptDeliveryStrategy::LapicLint0FixedInt);

    BARETEST_ASSERT(not global_pic.vector_in_irr(PIC_PIT_IRQ_VECTOR));
    BARETEST_ASSERT(global_pic.get_isr() == 0);

    global_pit.set_counter(100);
    enable_interrupts_and_halt();
    // In QEMU(8.1.5)/KVM(6.8.6) the Interrupt is never delivered.
    disable_interrupts();

    BARETEST_ASSERT(irq_info.valid);
    BARETEST_ASSERT(irq_info.vec == LAPIC_LINT0_PIC_IRQ_VECTOR);
    BARETEST_ASSERT(not lapic_test_tools::check_irr(LAPIC_LINT0_PIC_IRQ_VECTOR));

    lapic_test_tools::send_eoi();

    BARETEST_ASSERT(irq_count == 1);
}
```

Cope with Hardware Variety

Our Guest Tests are meant to run on a broad variety of x86 platforms. However, even platforms that belong to the same platform family differ subtly in the functionality they offer. Our Guest Tests detect this at runtime and automatically skip test cases whose required functionality is missing. For example, the lapic-modes test only runs the x2APIC test cases if x2APIC is present. Similarly, the HPET is not available on all platforms, so its test cases only run when it is present.

We call them conditional test cases, and in code, they look like this:

```cpp
TEST_CASE_CONDITIONAL(x2apic_msr_access_out_of_x2mode_raises_gpe, x2apic_mode_supported())
{ /* ... */ }
```

Thus, conditional test cases are a trade-off that lets us run a Guest Test on a broad variety of hardware belonging to the same overall platform without creating specialized boot items for every single machine.
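A condition such as `x2apic_mode_supported()` in the test case above is evaluated at runtime. One plausible way to implement such a check is CPUID: leaf 1 reports x2APIC support in ECX bit 21. The following sketch is hypothetical, and libtoyos may implement its check differently. It separates the pure bit test from the CPUID query, which uses the `__get_cpuid` helper from GCC’s and Clang’s `<cpuid.h>` and is therefore only compiled on x86 targets.

```cpp
#include <cassert>
#include <cstdint>

#if defined(__x86_64__) || defined(__i386__)
#include <cpuid.h>
#endif

// CPUID.01H:ECX bit 21 indicates x2APIC support (Intel SDM).
constexpr std::uint32_t CPUID_1_ECX_X2APIC = 1u << 21;

constexpr bool has_x2apic(std::uint32_t cpuid_1_ecx) {
    return (cpuid_1_ecx & CPUID_1_ECX_X2APIC) != 0;
}

// Hypothetical stand-in for a runtime test-case condition.
bool x2apic_supported_on_this_cpu() {
#if defined(__x86_64__) || defined(__i386__)
    unsigned eax = 0, ebx = 0, ecx = 0, edx = 0;
    if (__get_cpuid(1, &eax, &ebx, &ecx, &edx) == 0) {
        return false;  // CPUID leaf 1 not available
    }
    return has_x2apic(ecx);
#else
    return false;  // not an x86 CPU
#endif
}
```

Note that CPUID only reports whether the CPU supports x2APIC. Whether the LAPIC currently operates in x2APIC mode is controlled separately via bit 10 of the IA32_APIC_BASE MSR.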

Console Output & Output Scheme

As we explained in the previous article, each test prints text via serial and debug console output, which can easily be captured from VMMs (such as QEMU, VirtualBox, and Cloud Hypervisor) as well as on real hardware. The log follows our SoTest protocol, which was specifically designed for such low-level applications. For example, the output of our “Hello World” Guest Test with three test cases looks as follows:

```
SOTEST VERSION 1 BEGIN 3
test case: test_boots_into_64bit_mode_and_runs_test_case
Hello from test_impl_boots_into_64bit_mode_and_runs_test_case
SOTEST SUCCESS "boots_into_64bit_mode_and_runs_test_case"
test case: test_test_case_can_be_skipped
skipping as condition is NOT met: `false`
SOTEST SKIP
test case: test_test_case_is_skipped_by_cmdline
skipping as test case is disabled via cmdline
SOTEST SKIP
SOTEST END
```

CI Infrastructure

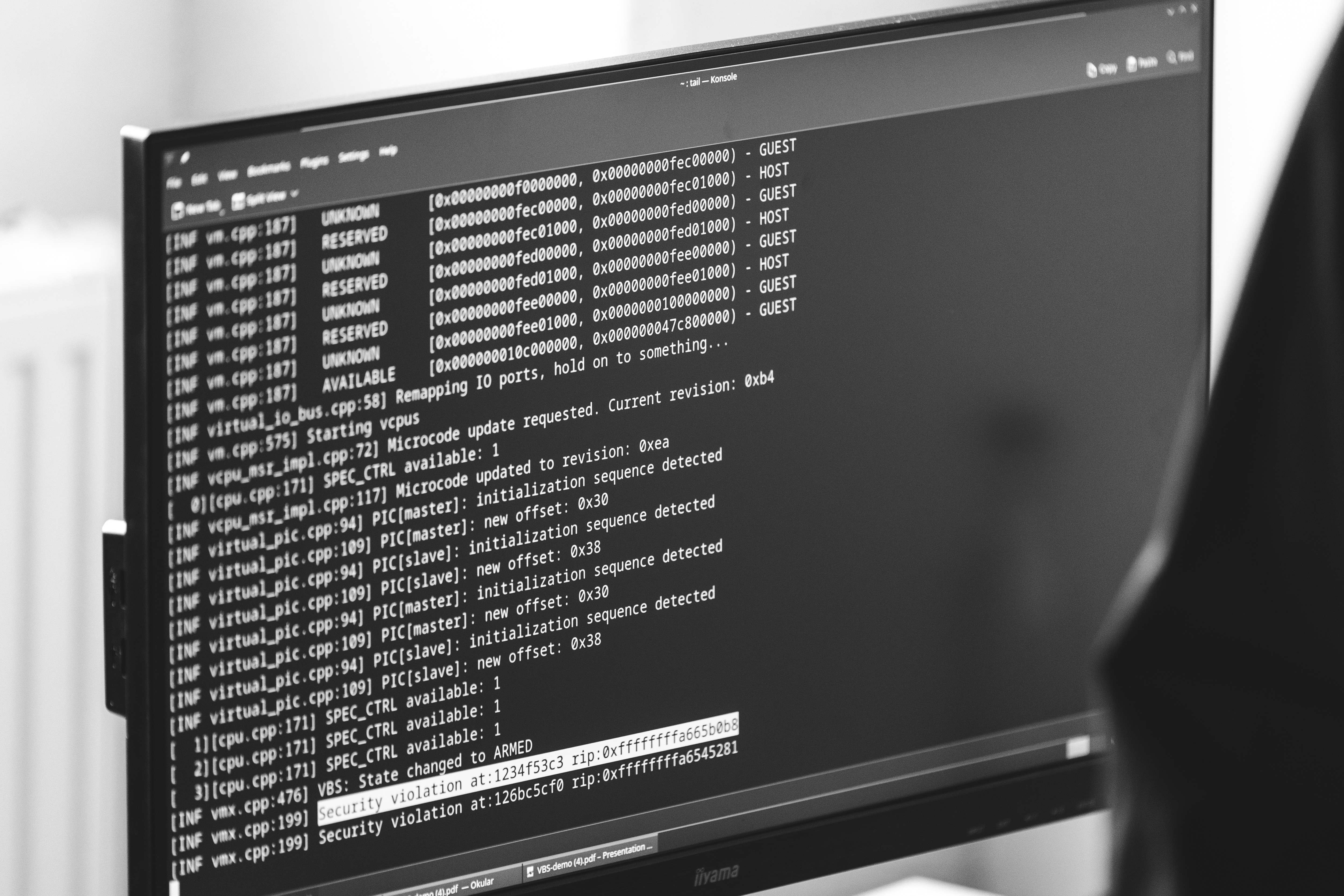

Using our SoTest platform, we can easily boot each test on multiple hardware platforms. The following screenshot shows an excerpt from our SoTest overview of a test run of all Guest Tests on real hardware, which is triggered by every single(!) commit:

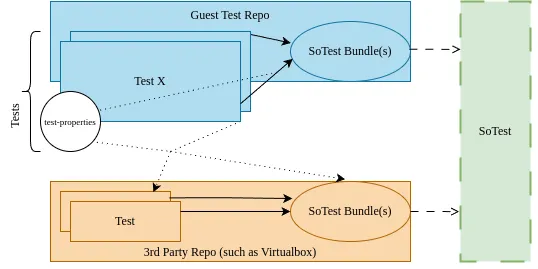

The CI of our Guest Test repository only tests the boot and execution on real hardware. For our work on VirtualBox, we schedule separate test runs in SoTest. We consume the Guest Test binaries and assemble dedicated test runs that boot VirtualBox in a Linux-based environment and start the Guest Test in a virtual machine. Linux and VirtualBox are configured so that all test output is forwarded to the serial device of the SoTest test machine. The following figure visualizes the relationship between the Guest Test repository, consumer repositories, and SoTest:

Coping with Broken Test Cases in a New CI Pipeline

When we onboard new virtualization stacks into our pipeline using our Guest Tests, not all test cases necessarily pass out of the box, as mentioned earlier. We can use the cmdline of a Guest Test to disable individual test cases and still get a green pipeline. This matters for two reasons:

- We do not necessarily want to fix stock upstream VMMs but still run them in CI without errors.

- We do not want to exclude a whole Guest Test in a pipeline, just because one test case does not succeed (so far!).

For every disabled test case, we either create a follow-up ticket to fix the issue or come up with a reasonable explanation of why fixing it is not urgent, or not necessary, for the given product under the given circumstances.
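To illustrate how conditional and cmdline-disabled test cases can coexist, here is a hosted sketch of a tiny test runner that emits lines in the format of the “Hello World” log above. Everything here is hypothetical: the `TestCase` struct, the comma-separated disable list, and the runner are not the libtoyos API. The `SOTEST FAIL` line is an assumption, since the example log only shows successes and skips. On real hardware, the output would go to the serial port rather than into a string.

```cpp
#include <cassert>
#include <functional>
#include <set>
#include <sstream>
#include <string>
#include <vector>

// Hypothetical test case descriptor; not the libtoyos API.
struct TestCase {
    std::string name;
    std::function<bool()> condition;  // runtime feature check
    std::string condition_text;       // printed when the condition fails
    std::function<bool()> body;       // returns true on success
};

// Parse a hypothetical "name1,name2" disable list taken from the cmdline.
std::set<std::string> parse_disabled(const std::string& list) {
    std::set<std::string> disabled;
    std::istringstream in(list);
    std::string name;
    while (std::getline(in, name, ',')) {
        if (!name.empty()) {
            disabled.insert(name);
        }
    }
    return disabled;
}

// Run all test cases and return a SoTest-style log.
std::string run_all(const std::vector<TestCase>& tests, const std::set<std::string>& disabled) {
    std::ostringstream log;
    log << "SOTEST VERSION 1 BEGIN " << tests.size() << "\n";
    for (const auto& tc : tests) {
        log << "test case: test_" << tc.name << "\n";
        if (disabled.count(tc.name) != 0) {
            log << "skipping as test case is disabled via cmdline\n" << "SOTEST SKIP\n";
        } else if (!tc.condition()) {
            log << "skipping as condition is NOT met: `" << tc.condition_text << "`\n"
                << "SOTEST SKIP\n";
        } else if (tc.body()) {
            log << "SOTEST SUCCESS \"" << tc.name << "\"\n";
        } else {
            // Assumed failure line; the example log only shows SUCCESS and SKIP.
            log << "SOTEST FAIL \"" << tc.name << "\"\n";
        }
    }
    log << "SOTEST END\n";
    return log.str();
}
```

Disabling a test case via the cmdline turns what would otherwise be a failure into a skip, while all other test cases of the same Guest Test keep running.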

Technical Details

Under the hood, each Guest Test is an ELF binary that is bootable via Multiboot (1 and 2) and Xen PVH on x86. For convenient use in VMM tests, we also package those tests as bootable ISO and EFI images, using GRUB as the bootloader.

Every Guest Test builds on the same base, called libtoyos, which brings the bootstrap processor (BSP) into 64-bit mode. On top of that, each Guest Test defines its test cases. In addition, libtoyos provides various lightweight drivers for the IOAPIC, the LAPIC, the PIT, the PIC, the serial device, and other devices, as well as helpers to define interrupt handlers and to test interrupts based on side effects, busy waiting, or HLT-based waiting. Because these tests wait for asynchronous events in a blocking way, an interrupt test case that never receives an expected interrupt simply gets stuck. Hence, such a test case never reports a failure by itself.
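Why a lost interrupt turns into a hang can be illustrated with a hosted, single-threaded analogue of busy waiting. In this sketch, a hypothetical `SimulatedTimer` stands in for the interrupt source and calls a counting handler every `period` ticks; a period of 0 models an interrupt that never arrives. Unlike the in-guest helpers described above, `wait_for_irqs` accepts an optional tick budget so that it can give up instead of spinning forever. All names are illustrative, not libtoyos code.

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical stand-in for a periodic interrupt source: every `period`
// ticks it "fires" and calls the handler, like a LAPIC timer would.
struct SimulatedTimer {
    std::uint64_t period;   // ticks between interrupts; 0 = never fires
    std::uint64_t elapsed;  // ticks so far
    void (*handler)();      // "interrupt handler" called on expiry

    void tick() {
        ++elapsed;
        if (period != 0 && elapsed % period == 0 && handler != nullptr) {
            handler();
        }
    }
};

// State updated by the counting handler. A real guest would mark it
// volatile; a plain variable suffices in this single-threaded simulation.
unsigned simulated_irq_count = 0;
void simulated_counting_handler() { ++simulated_irq_count; }

// Busy-wait until `expected` interrupts were counted. With max_ticks == 0,
// the loop never gives up, mirroring an in-guest helper that hangs when
// the interrupt never arrives.
bool wait_for_irqs(SimulatedTimer& timer, unsigned expected, std::uint64_t max_ticks = 0) {
    while (simulated_irq_count < expected) {
        if (max_ticks != 0 && timer.elapsed >= max_ticks) {
            return false;  // budget exhausted: the interrupt was presumably lost
        }
        timer.tick();  // on hardware, time passes and the IRQ fires asynchronously
    }
    return true;
}
```

A guest has no such budget to fall back on, which is why hang detection has to happen outside the Guest Test.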

However, our test infrastructure takes care of tests that hang and never produce the final SOTEST END marker: such a Guest Test is considered failed.
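A host-side check along these lines could evaluate a captured serial log. It treats a missing `SOTEST END` as a failure (the hang case) and compares the announced number of test cases with the number of reported results. This is a hypothetical sketch, not SoTest’s actual implementation. It only understands the lines visible in the example log above plus an assumed `SOTEST FAIL` line, and it strips a trailing carriage return, which serial captures often contain.

```cpp
#include <cassert>
#include <sstream>
#include <string>

struct SotestSummary {
    int announced = -1;  // N from "SOTEST VERSION 1 BEGIN N"
    int succeeded = 0;
    int skipped = 0;
    int failed = 0;
    bool ended = false;  // saw "SOTEST END"

    // A run passes only if it terminated and every test case reported.
    bool passed() const {
        return ended && failed == 0 && announced == succeeded + skipped;
    }
};

SotestSummary evaluate_log(const std::string& log) {
    SotestSummary s;
    std::istringstream in(log);
    std::string line;
    const std::string begin = "SOTEST VERSION 1 BEGIN ";
    while (std::getline(in, line)) {
        if (!line.empty() && line.back() == '\r') {
            line.pop_back();  // tolerate CRLF line endings from serial consoles
        }
        if (line.compare(0, begin.size(), begin) == 0) {
            s.announced = std::stoi(line.substr(begin.size()));
        } else if (line.compare(0, 15, "SOTEST SUCCESS ") == 0) {
            ++s.succeeded;
        } else if (line == "SOTEST SKIP") {
            ++s.skipped;
        } else if (line.compare(0, 11, "SOTEST FAIL") == 0) {  // assumed format
            ++s.failed;
        } else if (line == "SOTEST END") {
            s.ended = true;
        }
    }
    return s;
}
```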

Conclusion

Our Guest Tests are life-savers when it comes to hard-to-debug errors in virtualization stacks. They also give us an easy-to-access environment to quickly prototype and test a small aspect of an operating system on real hardware. Once we know what the hardware does, that behavior becomes the reference that VMMs must match.