Thomas Prescher, Markus Partheymüller, Stefan Hertrampf · 6 min read

KVM Backend for VirtualBox technical deep-dive

In this article, we want to provide you with a closer look under the hood of our KVM backend for VirtualBox. It replaces the original VirtualBox kernel module with a backend implementation for the KVM hypervisor provided by Linux.

Introduction

Virtualization solutions cover a wide range of functionality, from managing VMs and providing virtual devices all the way to interfacing with the underlying hardware. A common way to distinguish the VM-facing parts from the OS- and hardware-facing ones is to use the terms Virtual Machine Monitor (VMM) and hypervisor, respectively. A well-known example on Linux is the QEMU VMM used together with the KVM hypervisor. Often, this separation coincides with the privilege level each component runs at: the hypervisor in kernel mode, the VMM in user mode.

VirtualBox is an all-in-one solution that spans the entire spectrum in a single, unified software product regardless of whether you’re running Windows, Linux, or macOS. It features a common code base for platform-independent parts (e.g., UI, virtual devices) and specialized backend implementations for different host operating systems and hardware flavors. On Linux, it comes with a user-facing VMM part, and an out-of-tree kernel module (“vboxdrv”) that implements the hypervisor part and contains the entire implementation of hardware virtualization for Intel and AMD CPUs.

VirtualBox Virtualization Backends

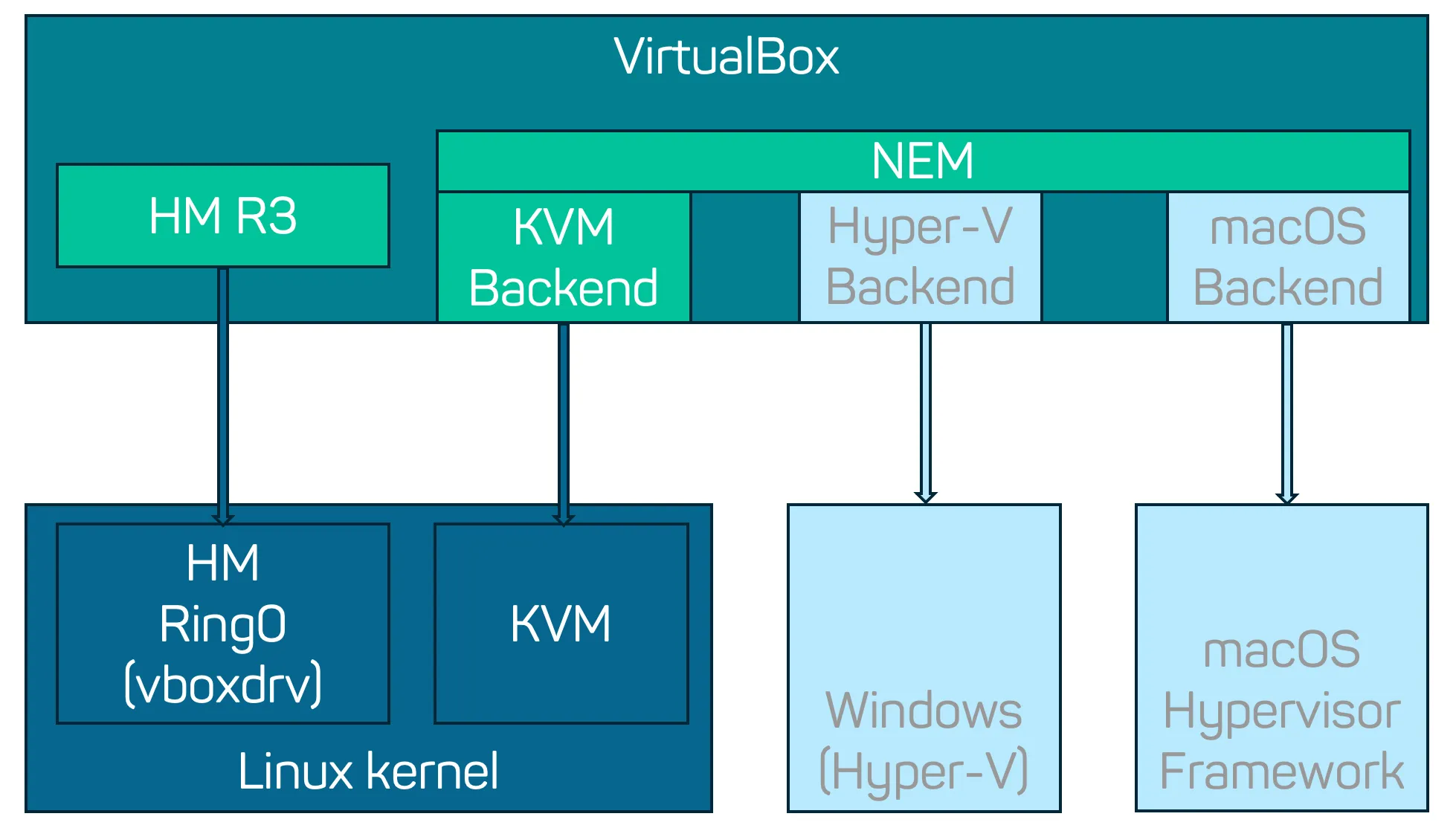

The VMM part of VirtualBox already incorporates support for multiple hypervisors to cater to different host operating systems. The Hardware Assisted Virtualization Manager (HM) is the standard backend on Linux systems and uses the vboxdrv kernel module for hardware virtualization. There are also backends for Windows (Hyper-V) and macOS (Hypervisor Framework), where large portions of this hardware-specific code are provided by the OS itself.

To facilitate implementing such different hypervisor backends in VirtualBox, Oracle introduced the Native Execution Manager (NEM). NEM provides a unified low-level virtualization interface for the platform-neutral part of the VMM and is already used to run on Windows and macOS. For KVM, VirtualBox only had an incomplete proof-of-concept version that was mainly used for testing the NEM interface on Linux.
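
To make the idea of a unified backend interface more concrete, here is a minimal sketch of how a platform-neutral VMM could select one of several hypervisor backends through a table of function pointers. This is not the actual NEM API: `hv_backend_ops`, `select_backend`, the callback names, and the stub implementations are all hypothetical and only illustrate the shape of such an abstraction.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

/* Hypothetical interface, NOT the real VirtualBox NEM API. */
struct vcpu;   /* opaque per-vCPU state owned by the platform-neutral VMM */

struct hv_backend_ops {
    const char *name;
    int  (*init_vm)(void);                 /* create the VM in the host hypervisor */
    int  (*run_vcpu)(struct vcpu *vcpu);   /* enter guest mode until the next exit */
    void (*kick_vcpu)(struct vcpu *vcpu);  /* force a running vCPU back to the VMM */
};

/* Stubs standing in for real backend code. */
static int  stub_init(void)              { return 0; }
static int  stub_run(struct vcpu *vcpu)  { (void)vcpu; return 0; }
static void stub_kick(struct vcpu *vcpu) { (void)vcpu; }

static const struct hv_backend_ops backends[] = {
    { "kvm",                  stub_init, stub_run, stub_kick },
    { "hyper-v",              stub_init, stub_run, stub_kick },
    { "hypervisor-framework", stub_init, stub_run, stub_kick },
};

/* Look up a backend by name; returns NULL if none matches. */
const struct hv_backend_ops *select_backend(const char *name)
{
    assert(name != NULL);
    for (size_t i = 0; i < sizeof backends / sizeof backends[0]; i++) {
        if (strcmp(backends[i].name, name) == 0)
            return &backends[i];
    }
    return NULL;
}
```

The platform-neutral code only ever calls through the table, so adding a backend means filling in one more entry rather than touching the callers.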

KVM Hypervisor NEM Backend

The original KVM backend was never intended for production use; it served as a test vehicle for the NEM architecture. Simply swapping the hypervisor portion was therefore not possible. Some minor fixes were needed to obtain a successful build, and the interrupt handling logic was missing important parts required for Windows guests (e.g., CR8 control register handling). Even with those in place, guest performance (especially for Windows) was far from acceptable. One very important indicator of Windows user experience is the latency of so-called Deferred Procedure Calls (DPCs). Using a latency monitor, we observed large spikes and traced them back to missing interaction between the KVM backend and the Time Manager (TM) in the main VMM code. A VMM must be able to regain control over the guest at specific points in time in order to inject interrupts correctly, so we added a signal timer that instructs the NEM to interrupt the guest whenever a scheduled action needs to be performed.
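
The general mechanism behind such a signal timer can be sketched in a few lines of C. A blocking guest entry such as `ioctl(vcpu_fd, KVM_RUN)` returns early with `EINTR` when a signal is delivered to the calling thread, so a timer that raises a signal at the next deadline is enough to get control back. The sketch below is an illustration under assumptions, not the code from our backend: it uses `setitimer` with `SIGALRM`, and `nanosleep` stands in for the guest entry so that it runs without `/dev/kvm`. The function names are made up for this example. In a multi-threaded VMM the signal would have to be directed at the specific vCPU thread.

```c
#define _DEFAULT_SOURCE
#include <assert.h>
#include <errno.h>
#include <signal.h>
#include <string.h>
#include <sys/time.h>
#include <time.h>

static volatile sig_atomic_t kick_count;

/* The handler only counts; the signal delivery itself does the real work. */
static void on_kick(int signo)
{
    (void)signo;
    kick_count++;
}

/* Stand-in for a blocking guest entry such as ioctl(vcpu_fd, KVM_RUN).
 * Returns 0 if it ran to completion, -1 with errno == EINTR if a signal
 * arrived first. */
static int blocking_guest_entry(void)
{
    struct timespec long_run = { .tv_sec = 5, .tv_nsec = 0 };
    return nanosleep(&long_run, NULL);
}

/* Arm a one-shot timer for `usec` microseconds, then enter the "guest".
 * Returns 1 if the timer forced an early return, 0 if not, -1 on error. */
int run_until_deadline(long usec)
{
    struct sigaction sa;
    struct itimerval timer;

    assert(usec > 0 && usec < 1000000);

    memset(&sa, 0, sizeof sa);
    sa.sa_handler = on_kick;   /* no SA_RESTART: the blocked call fails with EINTR */
    sigemptyset(&sa.sa_mask);
    if (sigaction(SIGALRM, &sa, NULL) != 0)
        return -1;

    memset(&timer, 0, sizeof timer);
    timer.it_value.tv_usec = usec;   /* it_interval stays zero: one-shot */
    if (setitimer(ITIMER_REAL, &timer, NULL) != 0)
        return -1;

    if (blocking_guest_entry() == -1 && errno == EINTR)
        return 1;   /* back in the VMM: run timers, inject interrupts */
    return 0;
}
```

Calling `run_until_deadline(10000)` arms a 10 ms deadline; the stand-in guest entry would otherwise block for five seconds.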

However, even with DPC latencies reduced to more acceptable numbers, the performance was still not sufficient. We discovered that the KVM backend was missing an important optimization: All interrupt handling for the guest system was done entirely by the VMM without the help of hardware virtualization features, such as hardware-assisted Task Priority Register (TPR) virtualization. This optimization is especially crucial for Windows guests, because the Windows kernel frequently uses the TPR to block interrupts below a certain priority. That’s why all virtualization solutions targeting Windows guests implement this optimization (see also this talk from KVM Forum 2008 about Intel FlexPriority).
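
To illustrate what the TPR does, the following sketch models the priority check on x86: an interrupt vector's priority class is its upper four bits, and the Local APIC holds back any vector whose class is not higher than the class stored in TPR bits 7:4. CR8 exposes exactly those four bits. The helper names are ours, and the model deliberately ignores in-service interrupts, which also contribute to the effective processor priority.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

/* CR8 holds the 4-bit priority class that lives in TPR bits 7:4. */
static inline uint8_t cr8_to_tpr(uint8_t cr8)
{
    assert(cr8 <= 0x0F);
    return (uint8_t)(cr8 << 4);
}

/* The priority class of a vector or TPR value is its upper nibble. */
static inline uint8_t priority_class(uint8_t value)
{
    return (uint8_t)(value >> 4);
}

/* True if the TPR alone blocks delivery of `vector`.
 * Simplification: in-service interrupts are not taken into account. */
bool tpr_blocks_vector(uint8_t tpr, uint8_t vector)
{
    return priority_class(vector) <= priority_class(tpr);
}
```

For example, a guest that writes 5 to CR8 sets the TPR to 0x50, which holds back vectors 0x50 to 0x5F and everything below, while vector 0x60 is still delivered. The check itself is trivial; the cost without hardware assistance comes from every such update having to be handled by the VMM.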

Leveraging KVM interrupt virtualization: IRQCHIP support for the KVM backend

To use advanced hardware virtualization features for interrupts, the hypervisor needs to be involved in interrupt handling. Because interrupt handling is such a critical component, there is a trade-off between security and performance. The shorter the path, the better the performance; in particular, additional transitions between kernel mode (where the hypervisor runs) and user mode (where the VMM runs) are costly. On the other hand, any additional code in the kernel brings a higher security risk. This is why KVM offers three modes of operation with respect to interrupts:

- All interrupt handling is done by the VMM (our starting point)

- Local APIC emulation in the hypervisor; I/O APIC and PIC in the VMM (in KVM terminology: “Split irqchip”)

- Local APIC, I/O APIC and PIC are all emulated in the kernel (in KVM terminology: “Full irqchip”)

For more information on KVM’s interrupt handling and the trade-off analysis, see this KVM Forum talk.

Our target for the KVM backend is the split irqchip, for multiple reasons. First, the most relevant performance gains can be achieved by handling the Local APIC in the kernel, unlocking hardware-assisted APIC virtualization. Second, VirtualBox offers a virtual IOMMU to its guests and this feature cannot be implemented using the full irqchip. Finally, we want to keep the amount of emulation in the kernel as small as possible while maintaining reasonable performance. The switch to this mode required adaptations to the virtual I/O APIC of VirtualBox in the way it sends virtual interrupts to the Local APIC, which is now handled in KVM. Furthermore, the functionality to save and restore running guest systems needed adjustments to capture the in-kernel interrupt controller state and restore it later on.
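
One way a user-space I/O APIC can hand an interrupt to the in-kernel Local APIC is to translate the redirection table entry of the asserted pin into an MSI message and pass it to KVM via the `KVM_SIGNAL_MSI` ioctl. The sketch below shows that translation for the standard x86 layouts of the redirection table entry and the MSI address/data pair. It is a simplified illustration and not the actual VirtualBox I/O APIC code: the function names are ours, and interrupt remapping is not considered.

```c
#include <assert.h>
#include <linux/kvm.h>
#include <stdint.h>
#include <string.h>
#include <sys/ioctl.h>

#define RTE_MASKED (1ull << 16)   /* mask bit of a redirection table entry */

/* Translate a 64-bit I/O APIC redirection table entry into an MSI message. */
struct kvm_msi rte_to_msi(uint64_t rte)
{
    struct kvm_msi msi;
    uint32_t vector        = (uint32_t)(rte & 0xFF);
    uint32_t delivery_mode = (uint32_t)((rte >> 8) & 0x7);
    uint32_t dest_mode     = (uint32_t)((rte >> 11) & 0x1);  /* 0 physical, 1 logical */
    uint32_t trigger_mode  = (uint32_t)((rte >> 15) & 0x1);  /* 0 edge, 1 level */
    uint32_t dest_id       = (uint32_t)((rte >> 56) & 0xFF);

    memset(&msi, 0, sizeof msi);
    msi.address_lo = 0xFEE00000u | (dest_id << 12) | (dest_mode << 2);
    msi.address_hi = 0;
    msi.data = vector | (delivery_mode << 8) | (trigger_mode << 15);
    return msi;
}

/* Deliver the interrupt described by `rte` to the in-kernel Local APIC.
 * Returns 0 for a masked entry, otherwise the result of KVM_SIGNAL_MSI
 * (> 0 delivered, 0 blocked by the guest, < 0 error). */
int deliver_to_kernel_lapic(int vm_fd, uint64_t rte)
{
    struct kvm_msi msi;

    if (rte & RTE_MASKED)
        return 0;
    assert(vm_fd >= 0);
    msi = rte_to_msi(rte);
    return ioctl(vm_fd, KVM_SIGNAL_MSI, &msi);
}
```

For save and restore, KVM exposes the register state of the in-kernel Local APIC through the `KVM_GET_LAPIC` and `KVM_SET_LAPIC` vCPU ioctls, which is the kind of state a snapshot has to capture once the Local APIC no longer lives in the VMM.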

Summary

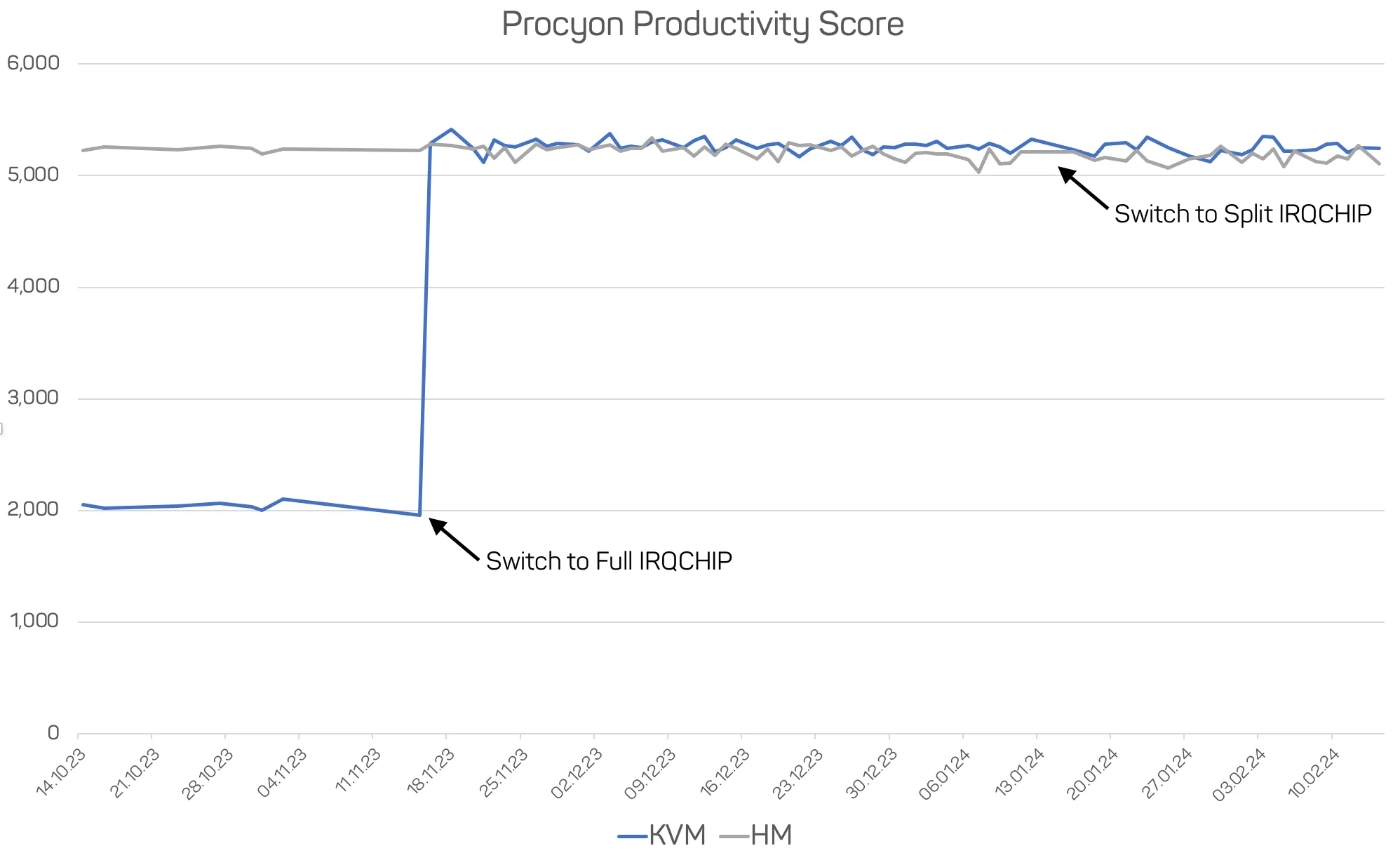

By implementing a KVM backend, we were able to run the user-space part of VirtualBox without any additional kernel modules while achieving performance comparable to the HM implementation in common workloads like office productivity software. The use of KVM’s interrupt virtualization in particular provided a significant performance boost. The graph shows the jump in benchmark scores after we activated the full irqchip. The subsequent move to the split irqchip did not impact these scores, which supports our assessment that most performance gains come from the in-kernel Local APIC emulation.

Procyon Office Productivity Suite scores, comparing KVM vs. HM implementation. Measured on a Lenovo ThinkStation P360 Tiny running a Windows 10 VM.

When we look at micro-benchmarks like disk speed, we can still observe the expected losses from moving device-specific emulation outside the kernel. In particular, virtualization-unfriendly devices like AHCI still have an edge in the HM implementation. We plan to cover our benchmarking efforts, which run as part of our automated CI, in a separate blog post, where we can also dive a bit deeper into the numbers we have obtained for the KVM backend.

Another caveat of removing the kernel modules that come with VirtualBox is that some convenience features around networking (e.g., bridged networks) are not easily available. These need to be configured manually in the host system (e.g., using tun/tap), which was sufficient for our use case. Building additional convenience functionality into the KVM backend for VirtualBox is currently not our focus.
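
As an illustration of what the tun/tap route involves on the host side, the following sketch creates a tap interface through the Linux `/dev/net/tun` device. The `TUNSETIFF` ioctl and the `IFF_TAP` and `IFF_NO_PI` flags are part of the Linux tun/tap interface; the function name and the interface name in the usage note are placeholders, and the call needs sufficient privileges (typically `CAP_NET_ADMIN`). Attaching the interface to a bridge and pointing the VM at it are separate steps that are not shown here.

```c
#include <assert.h>
#include <errno.h>
#include <fcntl.h>
#include <linux/if.h>
#include <linux/if_tun.h>
#include <string.h>
#include <sys/ioctl.h>
#include <unistd.h>

/* Create (or attach to) a tap interface called `name`.
 * Returns an open file descriptor, or -1 with errno set on failure. */
int open_tap(const char *name)
{
    struct ifreq ifr;
    int fd;

    assert(name != NULL);
    if (strlen(name) >= IFNAMSIZ) {
        errno = ENAMETOOLONG;
        return -1;
    }

    fd = open("/dev/net/tun", O_RDWR);
    if (fd < 0)
        return -1;

    memset(&ifr, 0, sizeof ifr);
    ifr.ifr_flags = IFF_TAP | IFF_NO_PI;   /* Ethernet frames, no extra packet header */
    memcpy(ifr.ifr_name, name, strlen(name) + 1);

    if (ioctl(fd, TUNSETIFF, &ifr) < 0) {
        int saved = errno;   /* keep the ioctl error across close() */
        close(fd);
        errno = saved;
        return -1;
    }
    return fd;
}
```

A call like `open_tap("tapdemo0")` returns a descriptor on which Ethernet frames can be read and written. The interface disappears again when the descriptor is closed unless it is made persistent.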

Outlook / Future work

We are currently working to extend the use of KVM-provided Hyper-V paravirtualization features to further increase the performance of Windows guests. Another focus area is the support of nested virtualization to enable advanced Windows virtualization-based security features (VBS) and allow guests to run their own virtual machines.

Last, but not least, we also built support for accelerated graphics using Intel’s SR-IOV technology, which we will cover in an upcoming article. Stay tuned!